More to the Point: Does it Matter?

The release of Sora 2, and especially the Sora app, less than two weeks ago has caused a lot of hand-wringing, and understandably so. It raises a lot of legitimate concerns, including copyright violations and lax decency guardrails, among others.

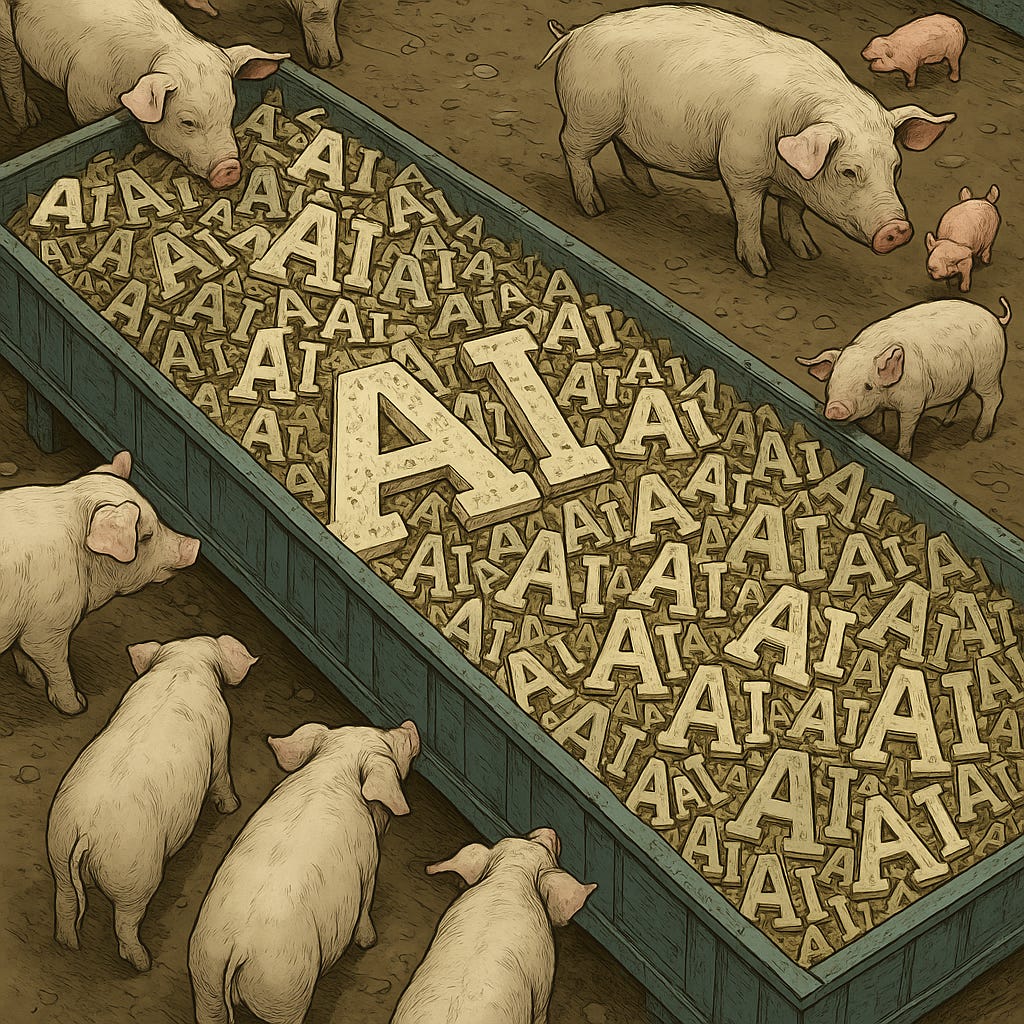

One of the most common criticisms is that it will propagate a vast amount of “AI slop,” meaningless drivel that will clog feeds and (further) degrade popular culture. Dismissing it as slop, however, risks underestimating the threat that it poses to corporate media. Most Sora content will probably have no redeeming value, but Sora will also produce some content that is “good enough” to compete for finite consumer time.

Tl;dr:

- Sora 2 is a technical leap forward from the first generation of Sora, but the most notable release is the Sora app.

- Although OpenAI touts Sora 2’s ability to follow intricate instructions, the biggest innovation may be the opposite: its ability to construct cohesive shots with almost no guidance. This completely collapses the barriers to creation.

- The Sora app raises a lot of legitimate concerns, including attention pollution, harm to information integrity, copyright infringement, environmental impact, and effects on the economics of the creator economy. But the most common criticism is that it will produce an infinite amount of “AI slop.”

- Most of what Sora produces will be drivel with no redeeming quality, like most creator content in general. But dismissing all of it as “slop” is dangerous. It risks applying a value judgment, or a definition of quality, that is out of step with what consumers value, and it focuses on average content, which is irrelevant. What matters is the best content, because that is what will compete for consumers’ time.

- Even though Sora is still in limited access, some Sora-produced videos are already driving real engagement on Instagram. I show some of these below. One has 7.6 million likes, 16 million shares, and over 200 million views, which likely puts it among the most-engaged Reels ever.

- Something similar is happening in music: AI-created music is gaining traction on Spotify, and AI-enabled covers are blowing up on YouTube, TikTok, and Instagram, driving engagement through likes, shares, and reaction and stitch videos.

- For the past three years, I’ve been making the case that GenAI will close the gap in production values between creator and corporate media and accelerate the ongoing low-end disruption of corporate media. Early signs suggest that some AI-generated content will be “good enough” to divert audience attention.

- Call it slop if you want, but dismissing it is a bad idea.

Sora 2 Collapses the Barrier to Creation

A lot has been written about Sora 2, but I’ll quickly set the stage.

OpenAI shocked everyone when it first announced Sora in February 2024.[1] As I wrote at the time (With Sora, AI Video Gets Ready for Its Close Up), OpenAI’s different approach to video generation, which combined compressed spacetime patches with a diffusion transformer architecture, proved that video was a technically solvable problem.

It still had limitations, especially in realism (the “uncanny valley” is a very hard challenge), audio-visual sync (especially between speech and lip movement), native audio (i.e., automatically generating audio to accompany video, like background sound, sound effects, etc.), and realistic physics (causal consistency, fluid dynamics, lighting, etc.). In May 2025, Google released Veo 3 and leapfrogged Sora on all of these dimensions. So it wasn’t surprising that OpenAI held off on releasing Sora 2 until it could at least match or surpass Veo 3 on each.

OpenAI released Sora 2 on September 30, and it is currently available by invitation only. I haven’t seen objective evaluations of Sora 2 vs. Veo 3, but Sora 2 appears comparable or better (and far better than the first generation of Sora) on a few measures:

- Native audio and lip-sync
- Improved physical realism
- Character and object consistency across shots

The biggest surprise was that OpenAI also released a Sora app (giving the app the same name as the model preserves OpenAI’s track record of confusing nomenclature, kind of like how there were three brothers on Newhart named Larry, Darryl, and Darryl). It is meant to be a social app, and it makes it very easy for users to insert themselves and, with permission, their friends into short generated videos.

OpenAI touts Sora 2’s ability to follow intricate instructions, but its most impressive innovation may be its ability to follow almost no instructions at all.

In its announcement of Sora 2, OpenAI touted the model’s “leap forward in controllability” and ability to “follow intricate instructions spanning multiple shots while accurately persisting world state.” However, what’s so amazing about Sora (the app) is the opposite: its ability to construct a cohesive clip with the sparest of prompts.