Why AI-Optimized Brands Risk Destroying Customer Value

In June 2024, Noom launched Welli, an AI health assistant designed to provide personalized support at scale. Users quickly reported issues with generic responses and lack of meaningful personalization.

Within 18 months, Noom would execute 45% workforce reductions, face $62 million in legal settlements, and expand into pharmaceutical distribution, adding GLP-1 medication programs alongside their traditional coaching services.

But here’s what makes this more than a cautionary tale: Noom’s AI worked. It generated responses, optimized engagement metrics, and enabled coaches to manage hundreds of clients instead of the 7-15 typical at competitors. The technology performed exactly as designed.

Its failure was strategic, not technical.

Noom optimized for operational efficiency when customers hired them for human connection. They automated empathy when empathy WAS the product. They removed friction from an experience where friction—the work of self-reflection, the wait for thoughtful coaching, the conscious choice to log each meal—was the transformation itself.

Noom solved customer pain points while being completely blind to their passion points.

The Pattern That’s Reshaping Categories

Between 2023 and 2025, something unexpected happened. Companies that had raised billions—Noom, WHOOP, Oura, MyFitnessPal, and Zoe—and pioneered AI-driven personalization began showing signs of strategic distress:

- $62 million in legal settlements
- 45-50% workforce reductions despite “efficiency gains”
- Clinical research on “orthosomnia,” where obsession with sleep tracking undermines sleep quality
- Approximately 30% of wearable users abandon their devices
- University of Michigan and Stanford research showing human-plus-AI coaching outperforms AI-only approaches by 74%

These weren’t companies that failed to invest in AI. They were companies that deployed sophisticated technology without asking: Which problems should AI solve, and which problems does AI create when we automate the wrong things?

The distinction matters because most organizations are racing to eliminate customer friction without asking a more fundamental question:

Is this friction frustrating customers, or is it engaging them?

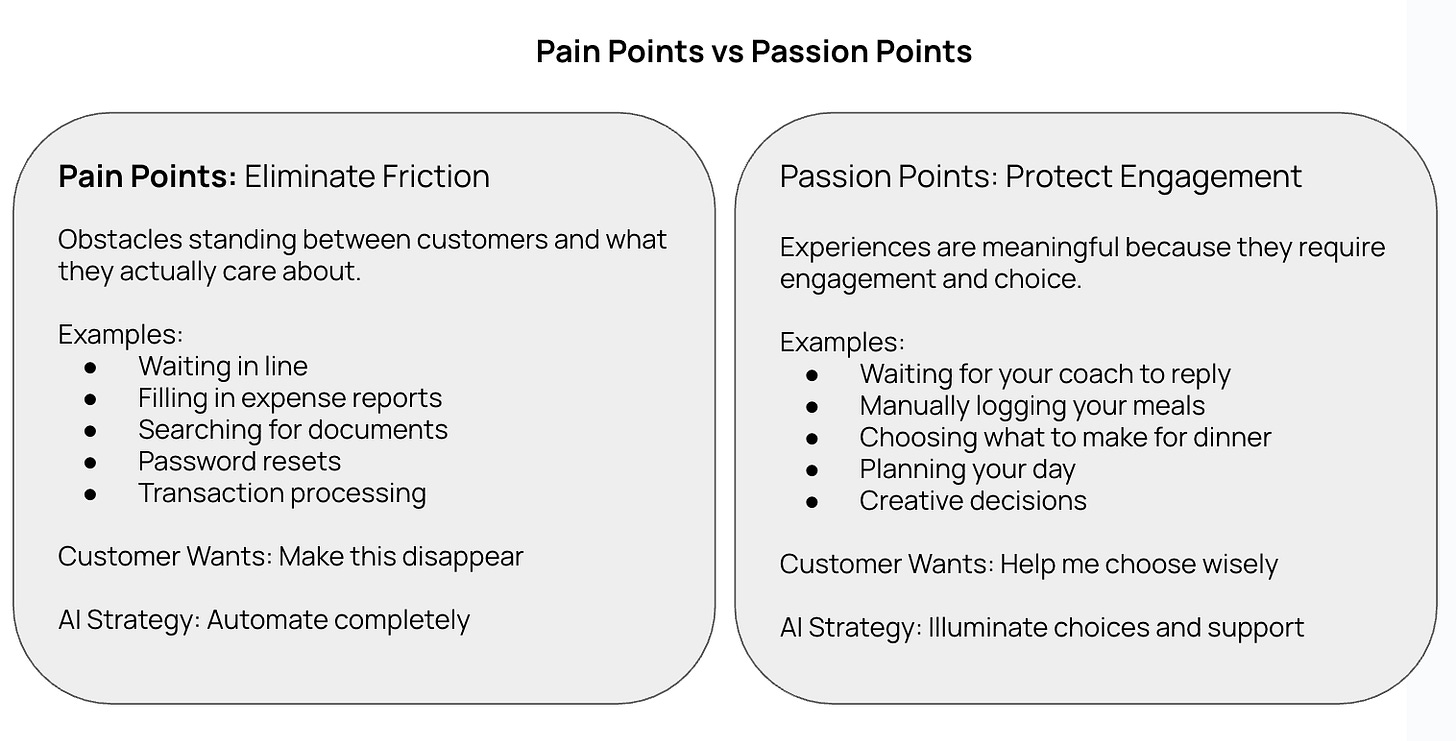

Pain points are problems customers want eliminated—the logistics, the tedium, and the obstacles standing between them and what they actually care about.

Passion points are experiences customers find meaningful precisely because they require engagement, choice, and human connection. These aren’t problems to solve. They’re the reasons customers showed up.

The most expensive strategic errors of the past two years came from companies that “solved” passion points as if they were pain points.

They optimized the meaning right out of their customer experience.

Why This Matters Beyond Wellness

If this were just a wellness industry problem, it would be concerning but contained. But the same pattern is emerging across luxury, financial services, healthcare, and any category where customer value depends on context, identity, or emotional resonance.

AI is remarkably effective at identifying friction and designing systems to eliminate it. That capability becomes dangerous when organizations can’t distinguish between friction that frustrates customers and friction that engages them—between the obstacle that prevents the experience and the texture that is the experience.

The opportunity isn’t in rejecting AI. It’s in developing what I call integration capabilities—the strategic architecture to know when AI’s answer is the wrong answer, when optimization destroys brand value, and when your competitors’ over-automation creates the opening you should exploit.

Figure 1: The Difference Between Automation & Engagement

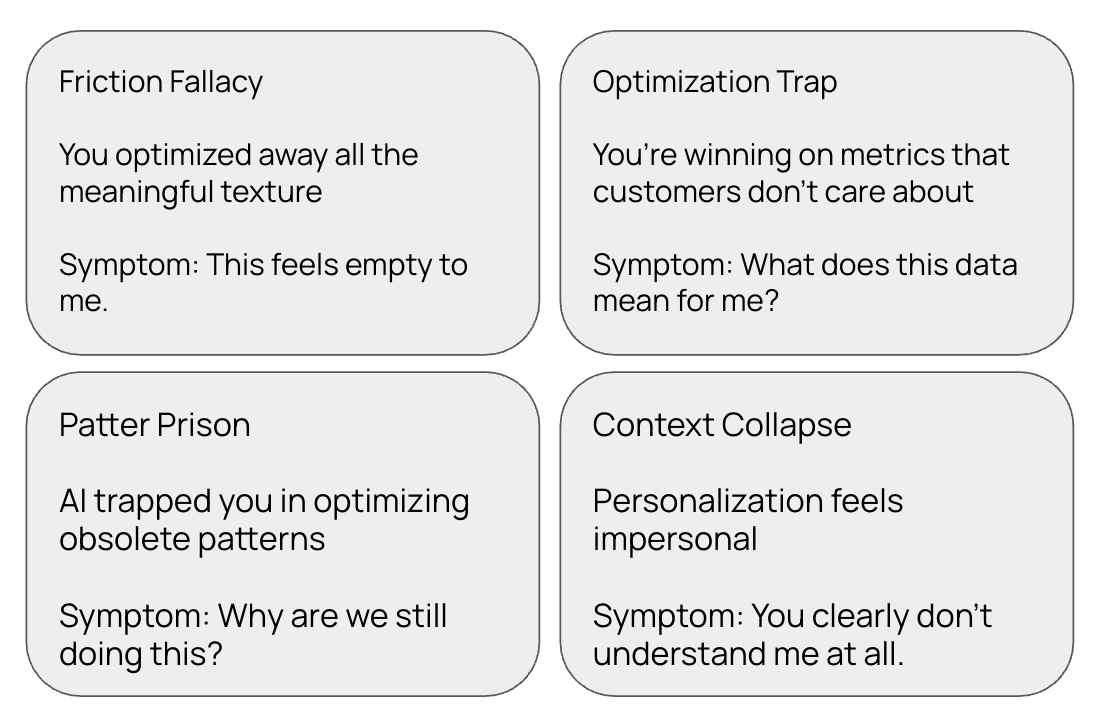

Four Patterns That Destroyed Billions

PATTERN 1: THE FRICTION FALLACY

You inadvertently optimize away the texture that makes customer experiences meaningful to them.

Noom reduced coach response times and scaled personalization. Users reported feeling “processed” rather than supported. One described: “AI became more and more prevalent and replacing real people felt so incredibly disingenuous and impersonal. I’m a real person doing HARD real-life stuff.”

Wellness transformation isn’t about convenience. It’s about commitment.

The “friction” Noom removed—manual food logging, waiting for coach responses, conscious meal choices—wasn’t inefficiency. It was the engagement that created behavior change.

University of Michigan and Stanford research proved this: human-plus-AI coaching achieved 74% better weight loss outcomes than AI-only approaches. Not because AI was technically inferior. Because the human relationship was the product, and automating it destroyed the value.

This is a passion point disguised as a pain point.

Customers didn’t want faster, more automated coaching. They wanted to feel understood, supported, and connected to someone who believed in their capacity to change.

Alarm bells:

- User complaints shift from “this is hard” to “this feels empty”
- Engagement metrics rise while satisfaction scores fall
- Competitors succeed with “slower” alternatives emphasizing human connection
- Your most committed users churn

Before automating any interaction, ask: If we made this instantaneous, would users feel accomplished or diminished? Are we removing an obstacle or removing the experience itself?

PATTERN 2: THE OPTIMIZATION TRAP

AI helps you compete brilliantly on dimensions that don’t matter.

Oura built sophisticated algorithms analyzing HRV, sleep stages, body temperature, and activity to generate daily “Readiness Scores.” The technology was impressive. The strategy was flawed.

The problem: Oura optimized for measurement accuracy when customers needed well-being support. Their AI could detect a 2% variation in HRV but couldn’t answer: “Should I push through this workout or rest today?”

Users began experiencing what sleep researchers call “orthosomnia”—anxiety about sleep scores that interferes with sleep quality. The technology designed to improve sleep was contributing to sleep problems.

Meanwhile, WHOOP laid off 15% of its workforce in 2022. Later, when a $96 million military contract went to competitor Oura, WHOOP protested. The contract was ultimately cancelled. The competitive dynamics revealed a market questioning whether measurement accuracy alone justified premium pricing when behavioral guidance remained limited.

This is the Optimization Trap: AI helped these companies compete brilliantly on measurement precision—a dimension that turned out not to matter as much as they assumed.

Customers didn’t need more accurate HRV readings. They needed better answers to “What should I do differently?”

As one wellness coach wrote, “AI will not provoke ‘aha’ moments. Coaches listen for what is not said in session as well as what is said.”

Alarm bells:

- You’re winning on metrics customers don’t discuss
- Feature complexity increases while satisfaction stagnates
- Competitors succeed with “simpler” offerings
- Customer support reveals confusion about what your data means

Before optimizing any metric, ask: Does improvement on this dimension change customer behavior? If we dominated this metric, would customers choose us? Are we competing on what’s measurable or what’s meaningful?

PATTERN 3: PATTERN PRISON

AI locks you into optimizing yesterday’s patterns instead of anticipating tomorrow’s shifts.

MyFitnessPal spent years perfecting AI-driven calorie tracking. By 2024, they had 200 million users and sophisticated personalization.

Then they removed their community newsfeed—the social features that had made the app sticky for core users. The rationale was classic: usage data showed newsfeeds had declining engagement compared to AI-driven features.

The algorithm was right about the pattern. The strategy missed what the pattern meant.

The newsfeed decline wasn’t because social features were less valuable. It reflected a broader shift: wellness culture was moving from public accountability to private support, from broadcasting progress to intimate connection. Users weren’t abandoning social features—they were seeking different kinds of social experiences.

Meanwhile, the category was shifting toward metabolic health (Levels, Signos), personalized nutrition (Zoe, InsideTracker), and GLP-1 weight management. MyFitnessPal’s AI kept optimizing calorie tracking—getting better at a solution to a problem the market was moving beyond.

McKinsey found that 70% of digital transformations fail, representing $2.3 trillion in cumulative waste globally over the past decade—programs that optimized historical patterns rather than anticipating strategic shifts.

This is Pattern Prison: AI excels at finding patterns in historical data and then optimizing those patterns with increasing sophistication. But if the pattern itself is becoming obsolete, optimization accelerates your journey in the wrong direction.

Alarm bells:

- AI recommendations consistently reinforce current strategy
- Your roadmap looks like “current approach, but better”
- New competitors succeed with fundamentally different models
- Customer language shifts but your AI-driven personalization doesn’t

Before optimizing based on AI insights, ask: What is this data optimized to detect? What weak signals might it be missing? If we’re wrong about this pattern’s future relevance, what’s the cost?

PATTERN 4: CONTEXT COLLAPSE

Scaling personalization makes it feel less personal.

High-touch coaching is intensive: at competitors like Vida Health and Omada, coaches typically manage 7-15 clients each. Deep engagement. High touch. Expensive.

AI promised to change the economics. Coaches could manage hundreds of clients with algorithms handling routine interactions.

In practice, it created context collapse.

The AI could personalize based on data—your weight, food logs, and stated goals. What it couldn’t do was understand the context behind the data. It didn’t know you were stress-eating because of a divorce. It couldn’t detect that your “lack of motivation” was actually depression. It missed that your goal weight was driven by body dysmorphia, not health.

One user reported, “The AI would say stuff like ‘Great logging!’ when I’d just binged 3000 calories. It was responding to the behavior (logging consistently) without understanding the context (disordered eating).”

The personalization was technically accurate and completely tone-deaf.

This is why Noom’s expansion into GLP-1 medications is revealing. When behavior change coaching becomes commoditized through AI, and when that AI can’t provide the contextual support that made coaching valuable, pivoting to pharmaceutical distribution starts making financial sense—even if it represents a fundamental departure from the founding mission.

Alarm bells:

- Users call your personalization “generic” despite sophisticated algorithms
- You’re scaling engagement while sacrificing understanding
- High-value customers churn to “lower-tech” competitors
- Complaints reference feeling “not heard” or “not understood”

Before scaling personalization, ask: What context is required to make this advice appropriate? Can our algorithm detect when it lacks crucial context? Where does automation cross from helpful to tone-deaf?

Figure 2: Four Common Misfires

Why This Keeps Happening

After highlighting these four distinct patterns, the obvious question is: “Why do smart companies with good technology make these mistakes repeatedly?”

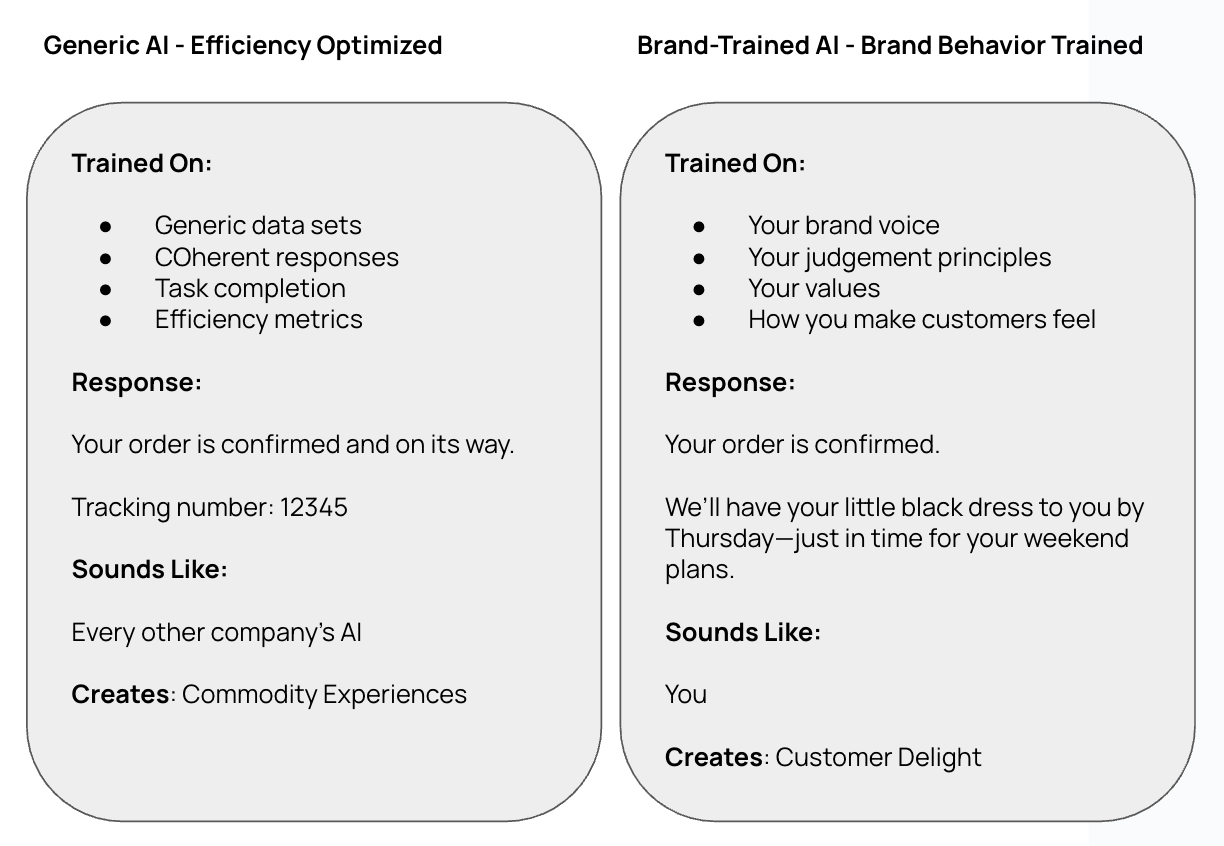

Because they’re using the wrong AI.

Not technically wrong—functionally wrong. They’re deploying generic AI trained for efficiency when they need AI trained to behave like their brand.

Noom’s AI was trained to give nutritionally accurate responses. Never trained to coach someone through stress-eating during a divorce.

Oura’s AI was trained to interpret biometric data. Never trained to recognize when obsessing over sleep scores was causing insomnia.

WHOOP’s AI was trained to optimize training recommendations. Never trained to tell the difference between “low recovery, need rest” and “low recovery, need encouragement.”

They automated brand behavior using AI that didn’t know what their brand behavior meant.

I’ve always believed products are what you offer customers. Brands are how you behave toward them.

Generic AI can automate product transactions, data processing, and features.

But automating brand behavior requires AI trained on your brand—your voice, judgment style, values, and the way you make people feel.

Generic AI = trained for coherent, efficient responses

Brand-trained AI = trained to sound like you, think like you, behave like you

When every company uses the same models, automation creates sameness, not advantage.

When your AI is trained on what makes you distinctive, automation can scale your brand—not replace it with efficiency.

The wellness failures happened because companies deployed generic AI for brand interactions, then wondered why customers experienced those interactions as generic.

Figure 3: The Same Interaction. Two Different Models

How to Make Better Decisions

The four patterns reveal something: AI deployment isn’t “automate everything” or “automate nothing.” It requires judgment.

Here’s the decision framework:

Three Questions for Every AI Deployment:

1. Is this a pain point or a passion point?

Pain point = eliminate it completely

Passion point = protect the engagement, maybe illuminate with AI but never automate the choice

2. If automating, does this need to sound like us?

Generic efficiency = use standard models, optimize for speed and cost

Brand behavior = train AI on your distinctive voice, values, judgment style

3. Can AI actually handle this?

Some decisions require human judgment regardless of AI sophistication:

- High-stakes outcomes (health crises, termination decisions)
- Moral judgment about human life
- Creative vision setting
- Situations where algorithmic error has severe consequences

Simple Rules:

AUTOMATE COMPLETELY when customers want something eliminated entirely and brand distinctiveness doesn’t matter. Use generic AI optimized for efficiency.

AUGMENT INTELLIGENTLY when decisions require both computational power and human wisdom. Use brand-trained AI to enhance human judgment, not replace it.

ILLUMINATE ONLY when customers value making the choice themselves. Use brand-trained AI to surface insights, but never make the decision for them.

PROHIBIT ENTIRELY when AI error has severe consequences or automation undermines human dignity and agency.

The intersection is simple:

- Pain point + generic behavior = automate completely with standard AI
- Pain point + brand behavior = augment with brand-trained AI
- Passion point = illuminate only (with brand-trained AI if automating insights)
- High stakes = prohibit regardless
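The intersection above can be read as a small decision procedure. Here is a minimal sketch in Python, assuming each interaction has already been classified by the three questions. The `Interaction` fields, the `Mode` names, and the sample interactions are illustrative assumptions, not part of any existing tool, and the classification itself remains a human judgment.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    AUTOMATE = "automate completely with standard AI"
    AUGMENT = "augment with brand-trained AI"
    ILLUMINATE = "illuminate only"
    PROHIBIT = "prohibit entirely"


@dataclass(frozen=True)
class Interaction:
    name: str
    is_passion_point: bool   # Question 1: pain point (False) or passion point (True)
    needs_brand_voice: bool  # Question 2: does this need to sound like us?
    high_stakes: bool        # Question 3: would an AI error have severe consequences?


def deployment_mode(interaction: Interaction) -> Mode:
    """Map an already-classified interaction to one of the four rules."""
    # "High stakes = prohibit regardless", so this check comes first.
    if interaction.high_stakes:
        return Mode.PROHIBIT
    # Passion points are never automated; AI may only surface insights.
    if interaction.is_passion_point:
        return Mode.ILLUMINATE
    # Pain point that must sound like the brand.
    if interaction.needs_brand_voice:
        return Mode.AUGMENT
    # Pain point where brand distinctiveness does not matter.
    return Mode.AUTOMATE


# Hypothetical interactions, labeled by hand for illustration only.
examples = [
    Interaction("appointment rescheduling", is_passion_point=False,
                needs_brand_voice=False, high_stakes=False),
    Interaction("service-delay apology", is_passion_point=False,
                needs_brand_voice=True, high_stakes=False),
    Interaction("choosing a weekly goal", is_passion_point=True,
                needs_brand_voice=True, high_stakes=False),
    Interaction("reply to signs of a health crisis", is_passion_point=True,
                needs_brand_voice=True, high_stakes=True),
]

for item in examples:
    print(f"{item.name}: {deployment_mode(item).value}")
```

The order of the checks carries the framework's priorities: stakes override everything, and the passion-point test comes before any question about which kind of AI to use. The hard part is answering the three questions honestly for each interaction, which no code can do for you.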

This prevents the systematic misfires that destroyed billions: companies using generic AI to automate passion points, companies automating decisions requiring brand judgment without training AI on that judgment, and companies optimizing historical patterns without detecting category shifts.

The opportunity isn’t in having better AI. It’s in having better judgment about when AI’s answer is the wrong answer and what type of AI is appropriate for different interactions.

What This Means for Leaders

The question isn’t whether to embrace AI. That decision has been made by competitive pressure, investor expectations, and customer behavior.

The question is whether you can deploy AI for advantage rather than creating the systematic vulnerabilities that destroyed billions in market value between 2023 and 2025.

Most companies are building AI capabilities—hiring data scientists, implementing algorithms, and optimizing operations.

Far fewer are building the judgment to know when AI’s answer is the wrong answer and how to train AI to behave like their brand.

This gap creates opportunity.

While competitors automate indiscriminately with generic models, you can differentiate on two dimensions:

Strategic restraint: Knowing when not to automate, so that you protect passion points while optimizing pain points.

Brand intelligence: When you do automate, deploy AI trained on your distinctive behavior rather than generic efficiency.

The combination is powerful. While competitors scale personalization that feels impersonal, you protect human moments AND ensure your AI interactions feel authentically you. While they optimize for measurable proxies with commodity AI, you compete on what customers actually value using brand-trained intelligence.

The wellness failures aren’t cautionary tales about the future—they’re competitive intelligence about the present. Every pattern represents a current vulnerability in organizations racing to deploy AI without this judgment.

The question is whether you’ll learn from their $62 million settlements and 45% workforce reductions before making similar mistakes, or whether you’ll exploit their over-automation while they’re distracted by efficiency metrics.

The Real Competitive Advantage

There’s a huge irony here.

AI was supposed to democratize capability—giving every organization access to sophisticated analysis, personalization, and optimization. And it has done exactly that.

But democratized capability doesn’t create differentiation.

When everyone has access to similar AI tools, trained on similar data, optimizing for similar metrics, the result isn’t competitive advantage—it’s competitive convergence.

The new scarcity isn’t AI capability. It’s knowing when not to use it.

The companies winning with AI share three characteristics:

First, they recognize the patterns. They can identify when they’re in a Friction Fallacy, Optimization Trap, Pattern Prison, or Context Collapse—and when they’ve deployed generic AI for interactions requiring brand intelligence. This lets them course-correct before small errors become strategic failures.

Second, they’ve built simple decision tools. They don’t ask “Can AI do this?” in isolation. They systematically ask: Should this be automated completely, augmented intelligently, illuminated only, or prohibited entirely? Does this require brand-trained AI, or is generic efficiency sufficient? This prevents ad hoc automation that accidentally destroys customer value.

Third, they have the confidence to override algorithms. When AI recommends a strategy that optimizes historical patterns, they ask, “What is this data missing?” When efficiency metrics improve while satisfaction stagnates, they investigate rather than celebrate. When generic AI is cheaper but brand-trained AI better serves customers, they invest in differentiation.

This isn’t Luddism or nostalgia. It’s strategic sophistication.

It’s recognizing that your competitors are making systematic mistakes right now—automating passion points, optimizing for wrong metrics, removing friction that creates meaning, deploying generic AI for brand interactions—and those mistakes create openings.

The window to build differentiation around strategic AI deployment is open. But it’s closing as more organizations develop this judgment.

The question is whether you’ll move quickly enough to capitalize on competitors’ misfires while building the discipline that prevents your own.

If You’re Still With Me, Here’s What To Do Next

If you’ve recognized your organization in any of the four patterns, if you’re deploying generic AI for brand interactions, or if you’re uncertain whether your AI investments are creating advantage or vulnerability, there’s a systematic way to find out.

The AI Integration Audit evaluates your current AI deployments to identify:

- Where you’re optimizing pain points while being blind to passion points
- Where you’re using generic AI for interactions requiring brand intelligence
- Where your automation strategy creates openings for competitors
- Where competitors’ over-automation creates market space you can exploit
- Which decisions need human judgment that you’ve accidentally algorithmatized

The audit takes about two weeks and involves interviews with leadership, analysis of customer feedback, systematic evaluation of AI deployments across customer journey stages, and assessment of whether your AI reflects your brand behavior or generic optimization.

You get a prioritized action plan: which automations to accelerate, which to redesign, which to prohibit, where strategic restraint creates differentiation, and which AI systems need brand training rather than generic models.

The Integration Workshop is a two-day intensive where your leadership team learns to apply this decision framework to live choices and understand how to develop brand-trained AI rather than deploying generic models. You work through actual AI deployment choices your organization faces. You leave with frameworks you can apply immediately and the confidence to override algorithms when judgment demands it.

Retained Integration Advisory is for organizations implementing comprehensive AI strategies that need ongoing counsel. This involves quarterly strategic reviews, participation in major AI deployment decisions, guidance on training AI to reflect brand behavior, and building internal capabilities so your team can make sound judgments independently.

The goal isn’t dependence—it’s capability transfer. Building your organization’s capacity to navigate AI deployment with judgment, ensuring your AI amplifies what makes you distinctive rather than making you generically efficient.

A Final Thought

The wellness technology companies documented here didn’t fail because they lacked technical talent, capital, or ambition.

They failed because they confused optimization with strategy.

They solved problems efficiently without asking whether those problems mattered to customers. They automated friction without distinguishing between obstacles and engagement. They deployed generic AI for brand interactions without recognizing that how brands behave is what creates lasting value.

Their failures prove that in categories where customer value depends on meaning, context, or human connection, strategic judgment matters more than algorithmic sophistication, and brand intelligence matters more than generic optimization.

You don’t need to be the organization with the most advanced AI.

You need to be the organization that knows when AI’s answer is the wrong answer, understands which type of AI serves which purpose, and has the confidence to act on that knowledge.

The companies that thrive over the next decade won’t be those that automated most aggressively or deployed the most sophisticated models.

They’ll be those that deployed most wisely—leveraging AI’s strengths while protecting the human moments that create lasting value and ensuring their AI behaves like their brand rather than like everyone else’s AI.

Adrian Barrow

Catalyst Strategy

Helping CMOs and CXOs deploy AI strategically—knowing when to automate, when to augment, and when to protect the human moments that create lasting value.