Google I/O 2025 was dedicated to AI.

At its annual developer conference, Google announced updates that put more AI into Search, Gmail, and Chrome. Its AI models were updated to be better at making images, taking actions, and writing code.

And while there wasn’t a big Android presence in the main keynote, Google had plenty to announce about its OS last week, including a redesign and updates to its device tracking hub.

Read below for all of the news and updates from Google I/O 2025.

-

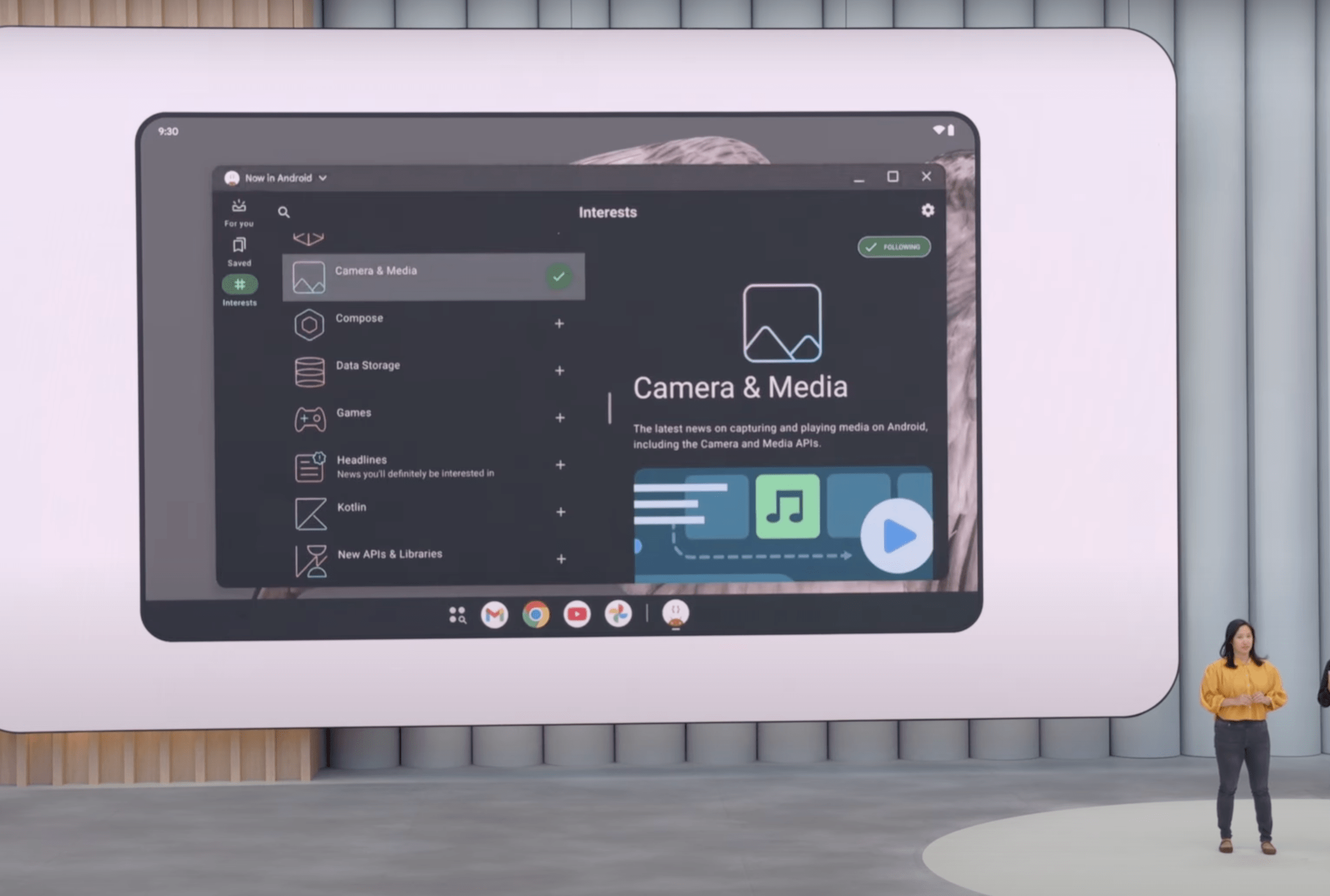

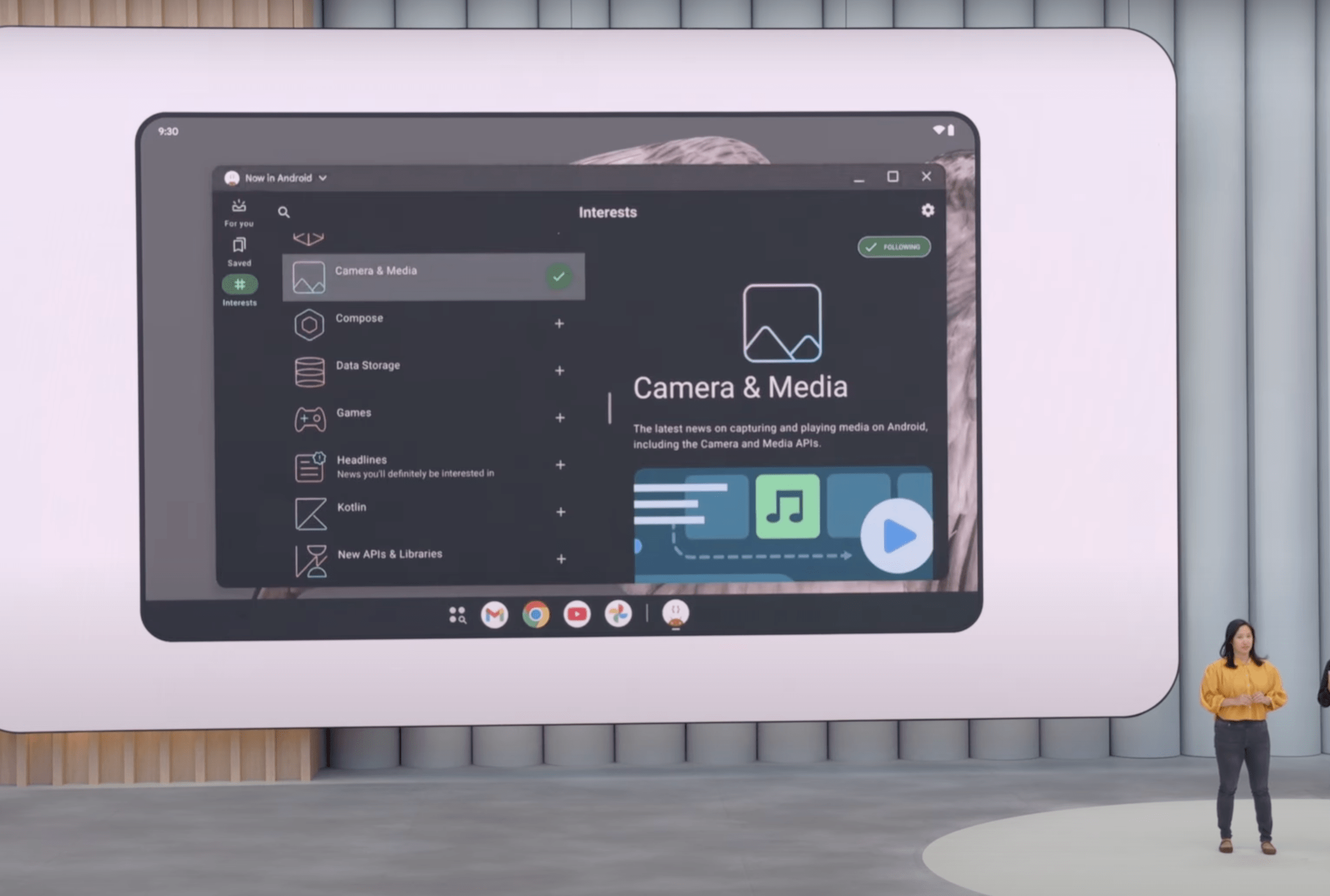

Google is working with Samsung to bring a desktop mode to Android. During Google I/O’s developer keynote, engineering manager Florina Muntenescu said the company is “building on the foundation” of Samsung’s DeX platform “to bring enhanced windowing capabilities in Android 16,” as spotted earlier by 9to5Google.

Samsung first launched DeX in 2017. The feature automatically adjusts your phone’s interface and apps when connected to a larger display, allowing you to use your phone like a desktop device.

-

Google’s AI models have a secret ingredient that’s giving the company a leg up on competitors like OpenAI and Anthropic. That ingredient is your data, and Google has only just scratched the surface in terms of how it can use your information to “personalize” Gemini’s responses.

Google first started letting users opt in to its “Gemini with personalization” feature earlier this year, which lets the AI model tap into your search history “to provide responses that are uniquely insightful and directly address your needs.” But now, Google is taking things a step further by unlocking access to even more of your information — all in the name of providing you with more personalized, AI-generated responses.

-

Google I/O was, as predicted, an AI show. But now that the keynote is over, we can see that the company’s vision is to use AI to eventually do a lot of Googling for you.

A lot of that vision rests on AI Mode in Google Search, which Google is starting to roll out to everyone in the US. AI Mode offers a more chatbot-like interface right inside Search, and behind the scenes, Google is doing a lot of work to pull in information instead of making you scroll through a list of blue links.

-

Sergey Brin on our world possibly existing within “a stack of simulations.”

The last question during the AI fireside chat at I/O 2025 was an invitation to make headlines, and the Google co-founder did his best, saying… something about reality and our existence. Listen in for yourself.

-

Sergey Brin: “Anyone who is a computer scientist should not be retired right now.”

Brin showed up to crash Google DeepMind CEO Demis Hassabis’ fireside chat at I/O 25, where he laid out what he does all day when asked by host Alex Kantrowitz.

The answer? “I think I torture people like Demis, who is amazing, by the way.” “…there’s just people who are working on the key Gemini text models, on the pretraining, post training. Mostly those, I periodically delve into some of the multi-modal work.”

-

Shorter and longer NotebookLM AI podcasts.

You can now have NotebookLM make you Audio Overviews that are short (around 5 minutes) and long (around 20 minutes) in addition to the default length of around 10 minutes.

-

Sergey Brin deals with a busted AI demo at I/O.

The Google co-founder has said he was “pretty much retired right around the start of the pandemic,” but came back to the company to experience the AI revolution.

This afternoon, we spotted him troubleshooting problems with this demo of Google Flow, which the company announced today as “the only AI filmmaking tool custom-designed for Google’s most advanced models — Veo, Imagen, and Gemini.”

-

Here in sunny Mountain View, California, I am sequestered in a teeny-tiny box. Outside, there’s a long line of tech journalists, and we are all here for one thing: to try out Project Moohan and Google’s Android XR smart glasses prototypes. (The Project Mariner booth is maybe 10 feet away and remarkably empty.)

While nothing was going to steal AI’s spotlight at this year’s keynote — 95 mentions! — Android XR has been generating a lot of buzz on the ground. But the demos we got to see here were notably shorter, with more guardrails, than what I got to see back in December. Probably because, unlike a few months ago, there are cameras everywhere and these are “risky” demos.

-

It’s Dieter again!

[Insert Leonardo DiCaprio pointing meme here, which I should really have saved last time!!!]

-

Darren Aronofsky is involved in a new film with AI-generated visuals.

The film, called Ancestra, “is directed by Eliza McNitt and blends emotional live-action performances with generative visuals, crafting a deeply personal narrative inspired by the day she was born,” according to a description from the movie’s trailer.

-

AI Overviews are going global.

Sure, they tell you to eat rocks and put glue on your pizza, but Google says AI Overviews are a smash, and it’s expanding the feature to a bunch of new countries and languages. They’re now available in more than 200 countries and more than 40 languages, Google says, and they’re starting to appear on more and more queries, too.

-

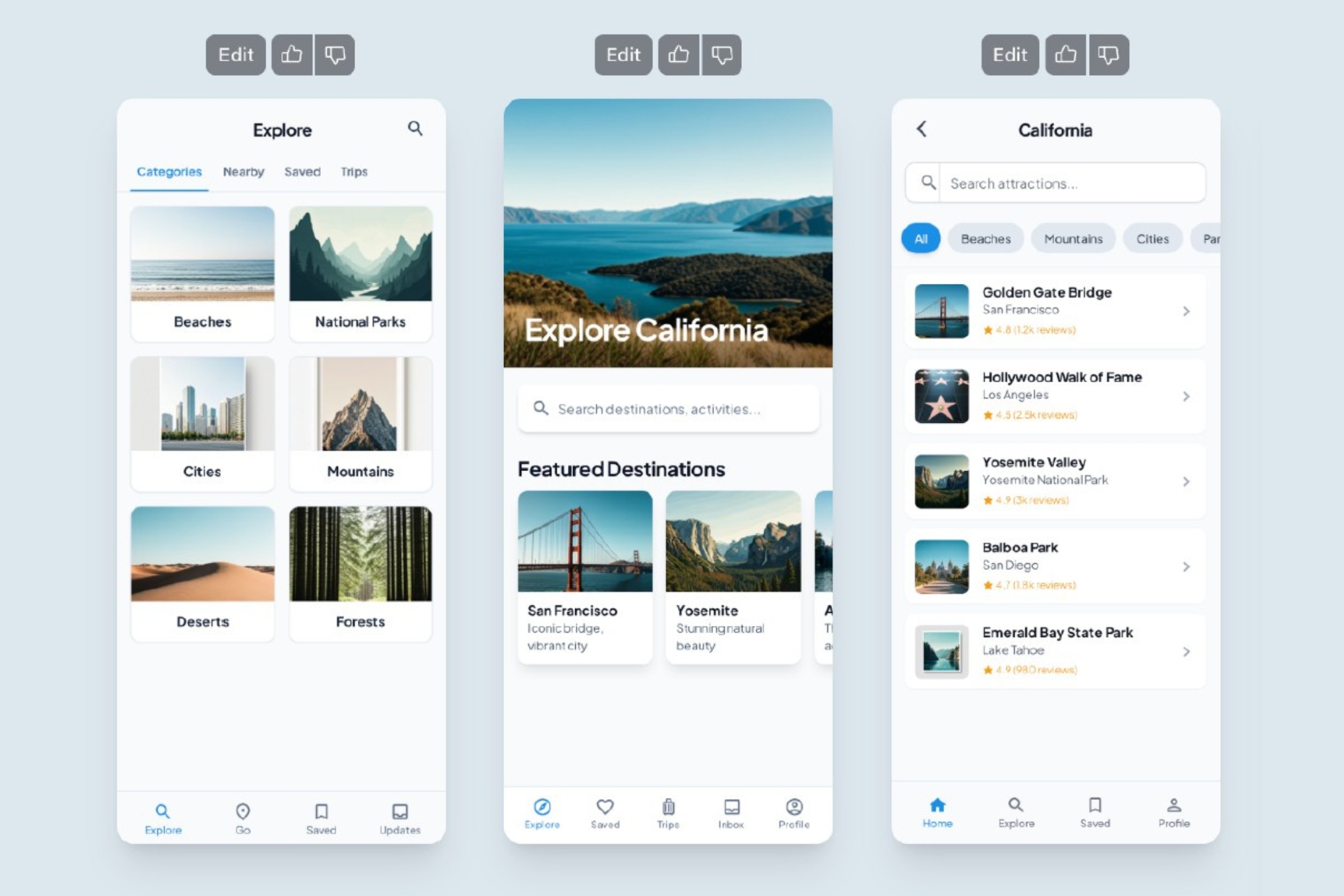

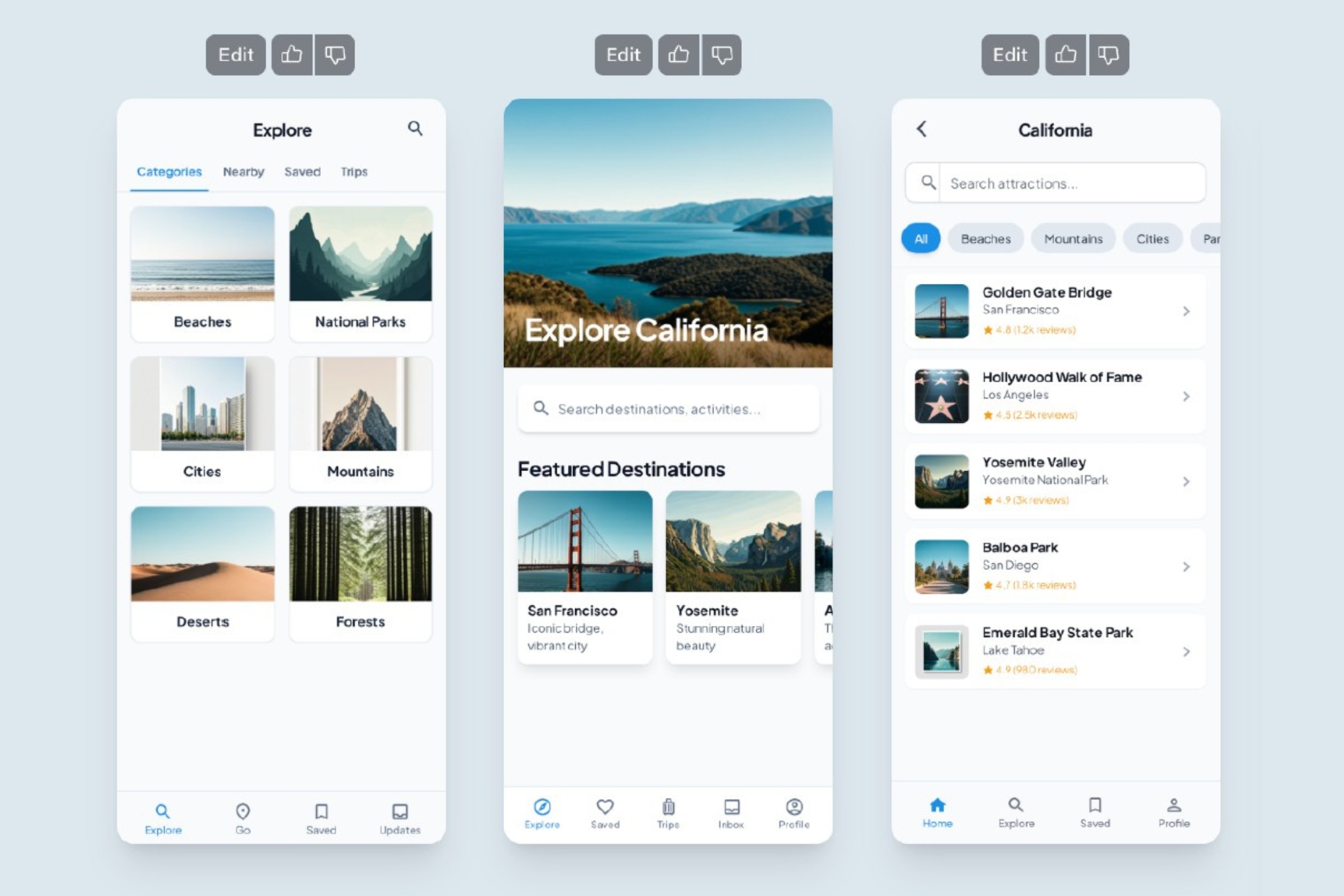

Google is launching a new generative AI tool that helps developers swiftly turn rough UI ideas into functional, app-ready designs. The Gemini 2.5 Pro-powered “Stitch” experiment is available on Google Labs and can turn text prompts and reference images into “complex UI designs and frontend code in minutes,” according to the announcement during Google’s I/O event, sparing developers from manually creating design elements and then programming around them.

Stitch generates a visual interface based on selected themes and natural language descriptions, which are currently supported in English. Developers can provide details they would like to see in the final design, such as color palettes or the user experience. Visual references can also be uploaded to guide what Stitch generates, including wireframes, rough sketches, and screenshots of other UI designs.

-

At its I/O developer conference today, Google announced two new ways to access its AI-powered “Live” mode, which lets users search for and ask about anything they can point their camera at. The feature will arrive in Google Search as part of its expanded AI Mode and is also coming to the Gemini app on iOS, having been available in Gemini on Android for around a month.

The camera-sharing feature debuted at Google I/O last year as part of the company’s experimental Project Astra, before an official rollout as part of Gemini Live on Android. It allows the company’s AI chatbot to “see” everything in your camera feed, so you can have an ongoing conversation about the world around you — asking for recipe suggestions based on the ingredients in your fridge, for example.

-

Since it was first demoed in 2021, Project Starline has felt like the kind of thing only a company like Google would bother trying to build: a fancy 3D video booth with no near-term commercial prospects that promises to make remote meetings feel like real life.

Now, Starline is nearly ready for primetime. It’s being rebranded to Google Beam and coming to a handful of offices later this year. Google has managed to shrink the technology into something it says will be priced comparably to existing videoconference systems. The real bet is that other companies will want to make their own hardware for Beam calls. “The devices aren’t really the point,” says Andrew Nartker, the project’s general manager. “The point is that we can beam things anywhere we need to with the infrastructure that we built.”

-

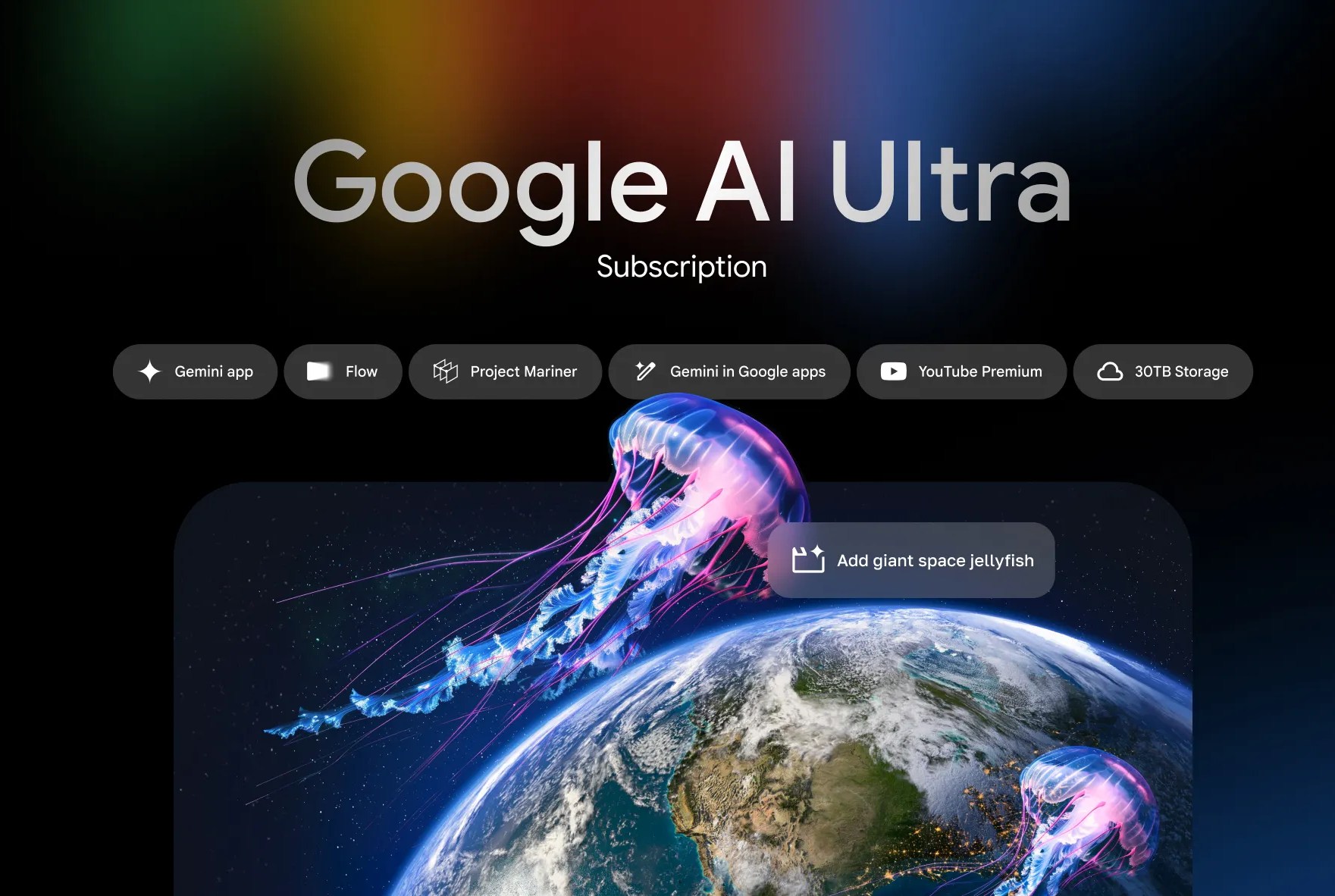

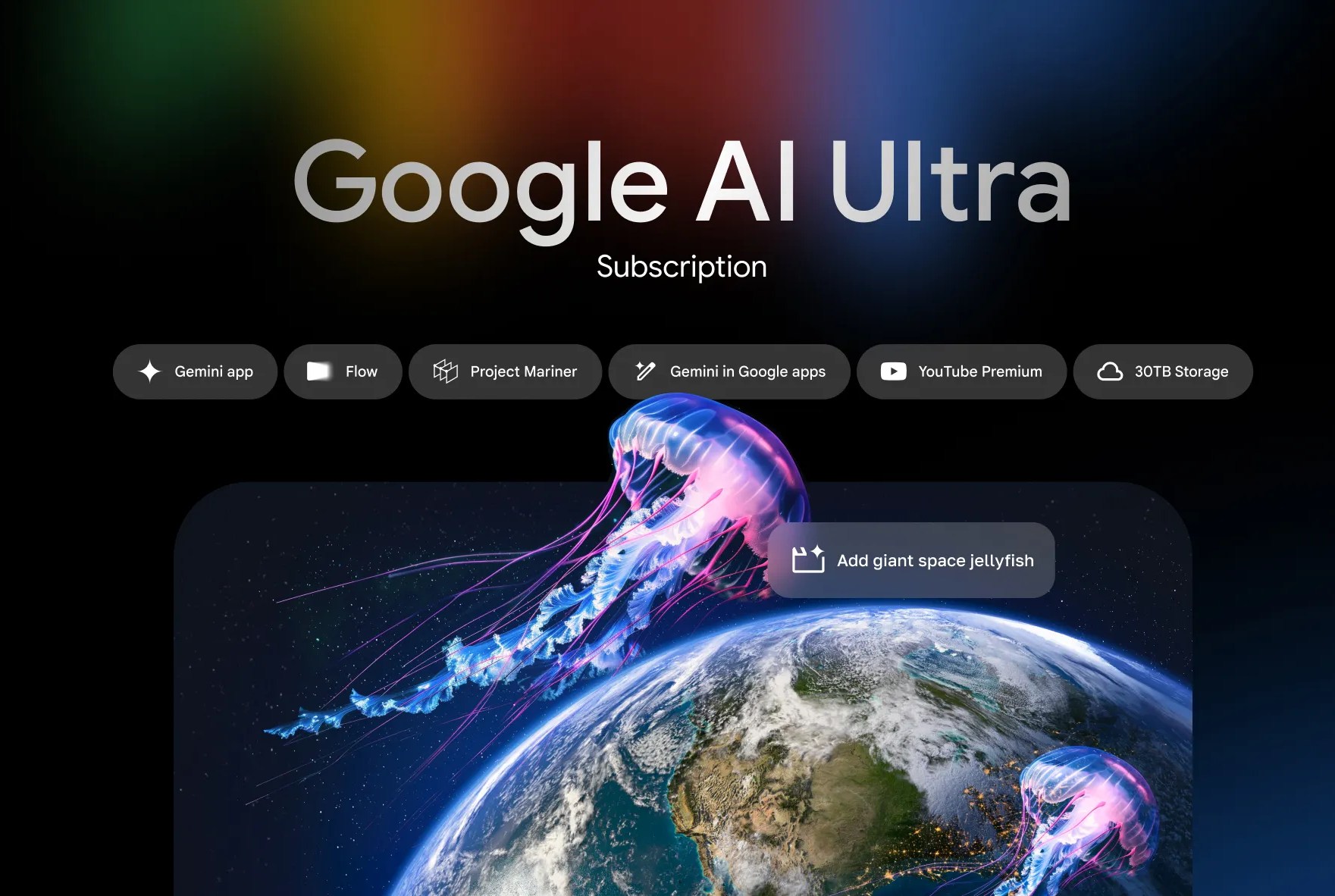

Google has announced a new AI subscription plan with access to the company’s most advanced models — and it costs $249.99 per month. The new “AI Ultra” plan also offers the highest usage limits across Google’s AI apps, including Gemini, NotebookLM, Whisk, and its new AI video generation tool, Flow.

The AI Ultra plan lets users try Gemini 2.5 Pro’s new enhanced reasoning mode, Deep Think, which is designed for “highly complex” math and coding. It offers early access to Gemini in Chrome, too, allowing subscribers to complete tasks and summarize information directly within their browser with AI.

-

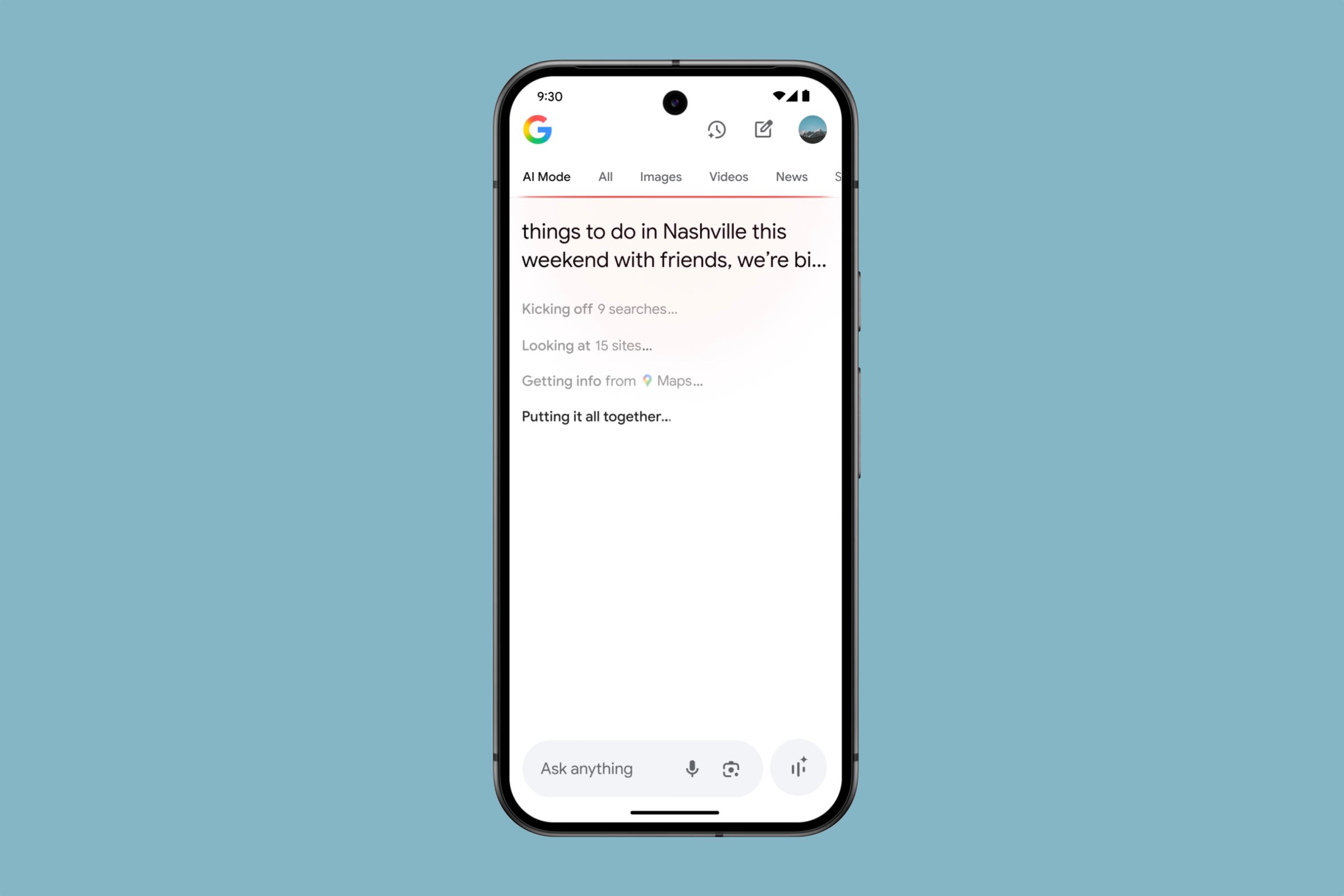

There’s a new tab in Google Search. You might have seen it recently. It’s called AI Mode, and it brings a Gemini- or ChatGPT-style chatbot right into your web search experience. You can use it to find links, but also to quickly surface information, ask follow-up questions, or ask Google’s AI models to synthesize things in ways you’d never find on a typical webpage.

For now, AI Mode is just an option inside of Google Search. But that might not last. At its I/O developer conference on May 20th, Google announced that it is rolling AI Mode out to all Google users in the US, as well as adding several new features to the platform. In an interview ahead of the conference, the folks in charge of Search at Google made it very clear that if you want to see the future of the internet’s most important search engine, then all you need to do is tab over to AI Mode.

-

Since its original launch at Google I/O 2024, Project Astra has become a testing ground for Google’s AI assistant ambitions. The multimodal, all-seeing bot is not a consumer product, really, and it won’t soon be available to anyone outside of a small group of testers. What Astra represents instead is a collection of Google’s biggest, wildest, most ambitious dreams about what AI might be able to do for people in the future. Greg Wayne, a research director at Google DeepMind, says he sees Astra as “kind of the concept car of a universal AI assistant.”

Eventually, the stuff that works in Astra ships to Gemini and other apps. Already that has included some of the team’s work on voice output, memory, and some basic computer-use features. As those features go mainstream, the Astra team finds something new to work on.

-

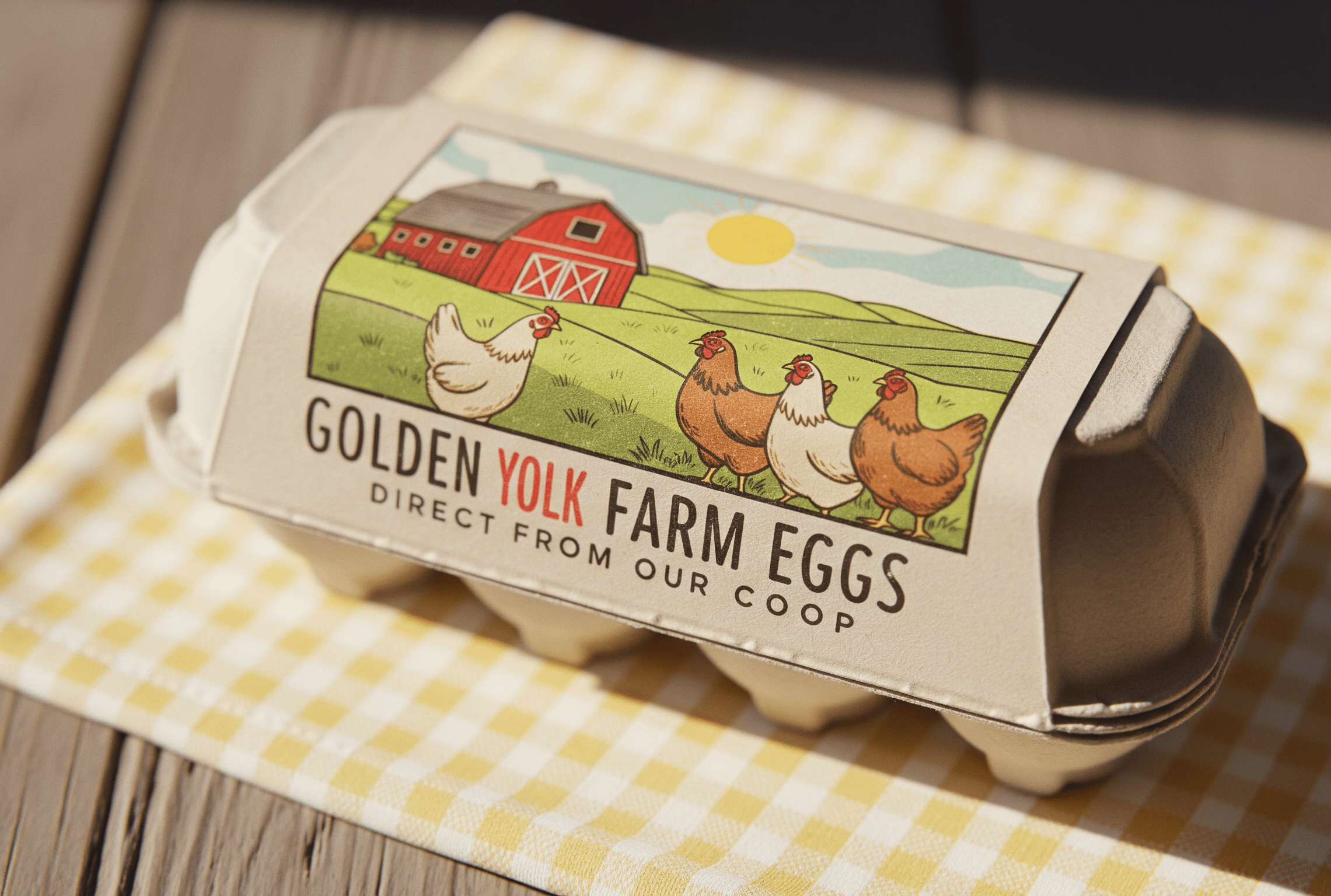

Google is launching a new version of its image generation model, called Imagen 4, and the company says that it offers “stunning quality” and “superior typography.”

“Our latest Imagen model combines speed with precision to create stunning images,” Eli Collins, VP of product at Google DeepMind, says in a blog post. “Imagen 4 has remarkable clarity in fine details like intricate fabrics, water droplets, and animal fur, and excels in both photorealistic and abstract styles.” Sample images from Google do show some impressive, realistic detail, like one showing a whale jumping out of the water and another of a chameleon.

-

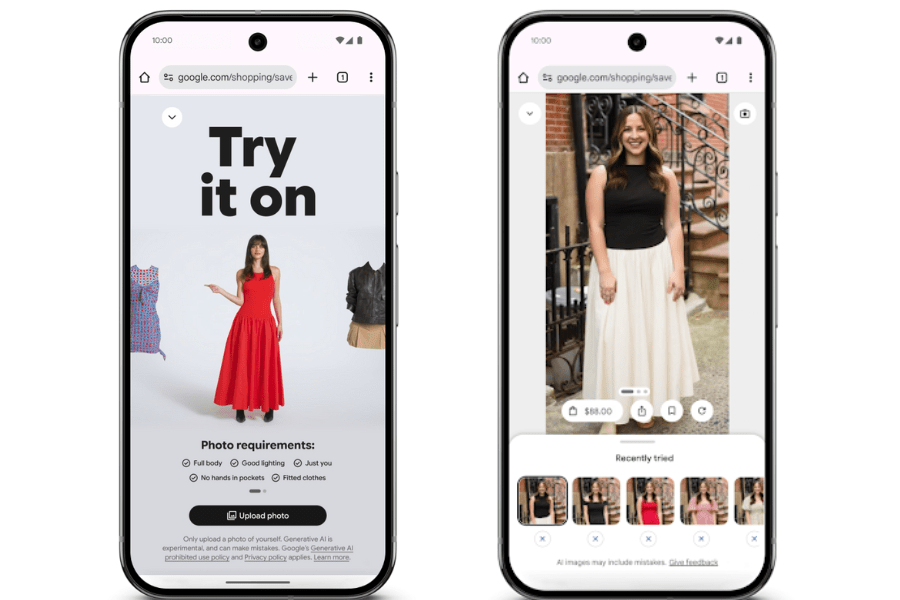

Google is taking its virtual try-on feature to a new level. Instead of seeing what a piece of clothing might look like on a wide range of models, it’s now testing a feature that lets you upload a photo of yourself to see how it might look on you.

The new feature is rolling out in Search Labs in the US today. Once you opt into the experiment, you can check it out by selecting the “try it on” button next to pants, shirts, dresses, and skirts that appear in Google’s search results. Google will then ask for a full-length photo, which the company will use to generate an image of you wearing the piece of clothing you’re shopping for. You can save and share the images.

-

Google is going to let Chrome’s password manager automatically change your password when it detects one that is compromised, the company announced at its Google I/O conference.

“When Chrome detects a compromised password during sign-in, Google Password Manager prompts the user with an option to fix it automatically,” according to a blog post. “On supported websites, Chrome can generate a strong replacement and update the password for the user automatically.”

-

Google wants to make it easier to create AI-generated videos, and it has a new tool to do it. It’s called Flow, and Google is announcing it alongside its new Veo 3 video generation model, more controls for its Veo 2 model, and a new image generation model, Imagen 4.

With Flow, you can use things like text-to-video prompts and ingredients-to-video prompts (basically, sharing a few images that Flow can use alongside a prompt to help inform the model what you’re looking for) to build eight-second AI-generated clips. Then, you can use Flow’s scenebuilder tools to stitch multiple clips together.

-

Google’s second era of smart glasses is off to a chic start. At its I/O developer conference today, Google announced that it’ll be partnering with Samsung, Gentle Monster, and Warby Parker to create smart glasses that people will actually want to wear.

The partnership hints that Google is taking style a lot more seriously this time around. Warby Parker is well known as a direct-to-consumer eyewear brand that makes it easy to get trendy glasses at a relatively accessible price. Meanwhile, Gentle Monster is currently one of the buzziest eyewear brands that isn’t owned by EssilorLuxottica. The Korean brand is popular among Gen Z, thanks in part to its edgy silhouettes and the fact that Gentle Monster is favored by fashion-forward celebrities like Kendrick Lamar, Beyoncé, Rihanna, Gigi Hadid, and Billie Eilish. Partnering with both brands suggests that Android XR is aimed at both versatile, everyday glasses and bolder, trendsetting options.

-

The Google smart glasses era is back, sort of. Today, Google and Xreal announced a strategic partnership for a new Android XR device called Project Aura at the Google I/O developer conference.

This is officially the second Android XR device since the platform was launched last December. The first is Samsung’s Project Moohan, but that’s an XR headset more in the vein of the Apple Vision Pro. Project Aura, however, is firmly in the camp of Xreal’s other gadgets. The technically accurate term would be an “optical see-through XR” device. More colloquially, it’s a pair of immersive smart glasses.

-

Google is bringing an “Agent Mode” to the Gemini app and making some updates to its Project Mariner tool, CEO Sundar Pichai announced at Google I/O 2025.

Project Mariner, Google’s AI agent tool that can search the web for you, can now oversee up to 10 simultaneous tasks, Pichai said.

-

Writing code is the killer chatbot app, and Google knows it.

In addition to touting Gemini 2.5 as a coding tool, Google just released a public beta for Jules, a “coding agent” that can work in the background fixing bugs and writing new features in your codebase. It’s a lot like what you can do with GitHub Copilot. Jules can even make an audio summary of its changes. So, a podcast about code commits? Jules has been in Google Labs for a few months, and is free to use during the beta period.